Sight and hearing dominate AI research, while smell has been severely neglected.

An electronic nose (e-nose) captures a unique “olfactory fingerprint” for each odour, opening a new path for AI olfactory perception.

This study explores the cross-modal generation path from “odour” to “image” to bridge a critical gap in AI perception.

Research questions

How can odour features be extracted from e-nose data using machine learning?

How can these features be fed into a diffusion model for image generation?

Part.02

Methodological and technical pathways

System Process Overview

Data Processing and Encoding

Model Architecture and Generation Strategy

System Process Overview

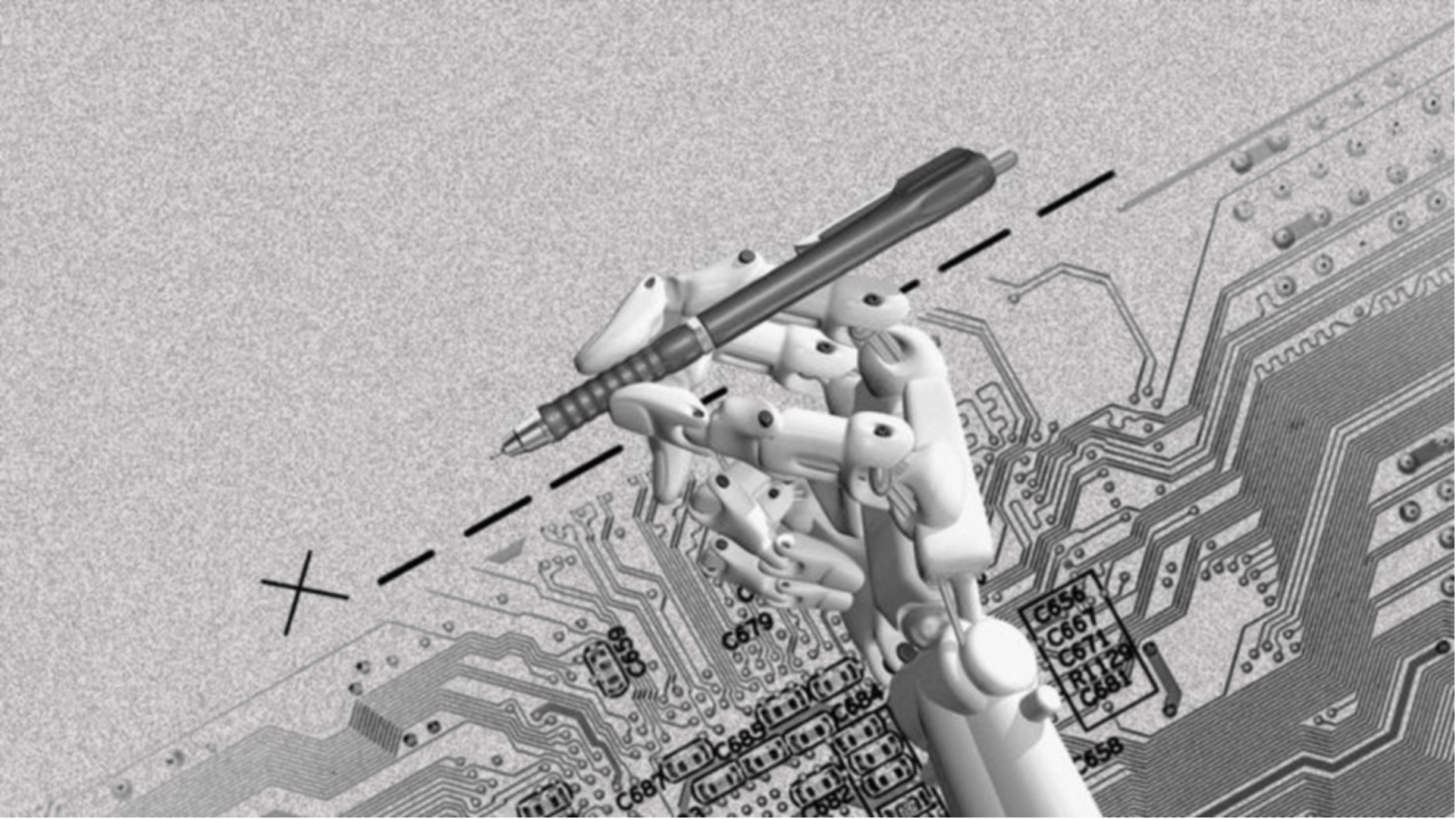

This study proposes a multi-stage generation pipeline from sensor acquisition to image generation.

Electronic nose collects odour data → GAF image encoding → CNN extracts features → Transformer generates latent embedding → diffusion model synthesises images
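The five stages above can be sketched as a chain of functions. This is a minimal illustration assuming NumPy and an 8-sensor array sampled for 128 steps; every stage function (`collect`, `gaf_encode`, `cnn_features`, `transformer_embed`, `diffusion_generate`) is a hypothetical placeholder that only tracks the array shapes handed between stages, not the study's actual models.

```python
import numpy as np

# Each stage is a hypothetical placeholder that only shows the shape of the
# data passed to the next stage; none of them is a trained model.
rng = np.random.default_rng(0)

def collect(steps=128, sensors=8):
    """E-nose recording stand-in: (time steps, sensors)."""
    return rng.random((steps, sensors))

def gaf_encode(series):
    """One Gramian Angular Summation Field per sensor: (sensors, T, T)."""
    lo, hi = series.min(axis=0), series.max(axis=0)
    x = np.clip(2 * (series - lo) / (hi - lo + 1e-12) - 1, -1, 1)
    phi = np.arccos(x).T                      # (sensors, T)
    return np.cos(phi[:, :, None] + phi[:, None, :])

def cnn_features(images, dim=64):
    """CNN stand-in: per-channel global average pooling + random projection."""
    pooled = images.mean(axis=(1, 2))         # (sensors,)
    return pooled @ rng.standard_normal((pooled.size, dim))  # (dim,)

def transformer_embed(features, dim=32):
    """Transformer stand-in: projection to the latent embedding size."""
    return np.tanh(features @ rng.standard_normal((features.size, dim)))  # (dim,)

def diffusion_generate(embedding, size=64):
    """Diffusion stand-in: ignores the embedding, returns an RGB-sized array."""
    return rng.random((size, size, 3))

PIPELINE = [collect, gaf_encode, cnn_features, transformer_embed, diffusion_generate]

data = None
for stage in PIPELINE:
    data = stage() if data is None else stage(data)
    print(f"{stage.__name__:>20}: {data.shape}")
```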

Data Processing and Encoding

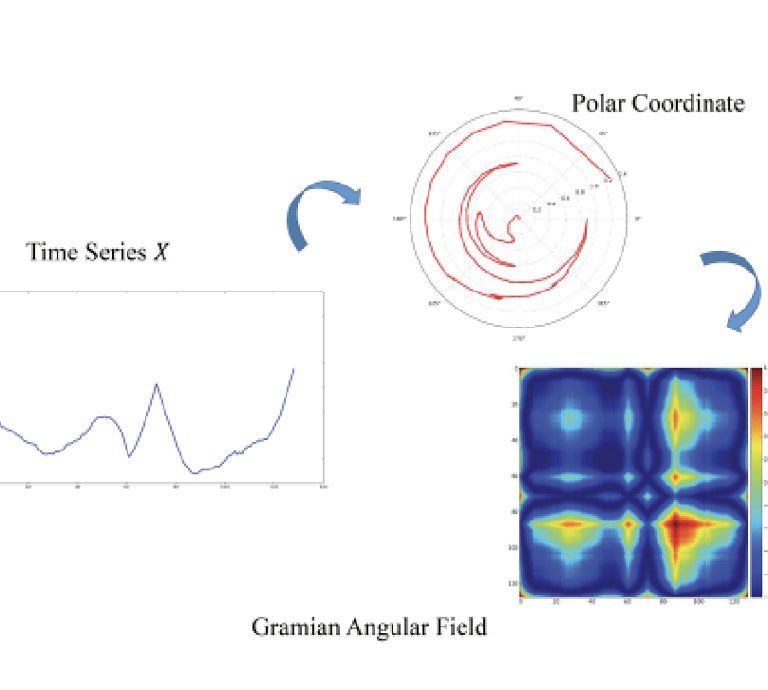

Time-series sensor data are converted into GAF images, preserving their temporal dynamics.

GAF images contain spatial structure that convolutional neural networks (CNNs) can recognise.

Figure 1. Illustration of the proposed encoding map of Gramian Angular Field (Wang & Oates, 2015)
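A minimal NumPy sketch of the Gramian Angular Field encoding as described by Wang and Oates (2015): rescale the series to [-1, 1], map each value to an angle phi = arccos(x), then form GASF[i, j] = cos(phi_i + phi_j) or GADF[i, j] = sin(phi_i - phi_j). The optional Piecewise Aggregate Approximation (PAA) step shrinks long recordings before encoding. The sensor curve in the usage lines is synthetic, and the helper names are illustrative.

```python
import numpy as np

def rescale(x):
    """Min-max rescale a 1-D series to [-1, 1] so arccos is defined."""
    x = np.asarray(x, dtype=float)
    span = x.max() - x.min()
    if span == 0:
        return np.zeros_like(x)
    return np.clip(2 * (x - x.min()) / span - 1, -1.0, 1.0)

def paa(x, segments):
    """Piecewise Aggregate Approximation: mean of `segments` near-equal chunks."""
    chunks = np.array_split(np.asarray(x, dtype=float), segments)
    return np.array([chunk.mean() for chunk in chunks])

def gasf(x):
    """Gramian Angular Summation Field: G[i, j] = cos(phi_i + phi_j)."""
    phi = np.arccos(rescale(x))
    return np.cos(phi[:, None] + phi[None, :])

def gadf(x):
    """Gramian Angular Difference Field: G[i, j] = sin(phi_i - phi_j)."""
    phi = np.arccos(rescale(x))
    return np.sin(phi[:, None] - phi[None, :])

# Synthetic sensor rise curve, for illustration only.
t = np.linspace(0, 1, 200)
response = 1 - np.exp(-5 * t)
series = paa(response, 64)          # compress 200 samples to 64 before encoding
summation, difference = gasf(series), gadf(series)
```

The GASF is symmetric with diagonal cos(2 phi_i) = 2x_i^2 - 1, so the main diagonal keeps the rescaled series itself; the GADF is antisymmetric with a zero diagonal.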

Sensors → GAF → image dataset
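One plausible way to turn multi-sensor recordings into an image dataset, assuming each sensor becomes one GAF channel and recordings are cut into fixed-length windows. The window length (64), stride (32), array size (8 sensors), helper names and integer class labels are hypothetical choices, not values from the study.

```python
import numpy as np

def gasf(x):
    """GASF of one 1-D series after min-max rescaling to [-1, 1]."""
    x = np.asarray(x, dtype=float)
    span = x.max() - x.min()
    x = np.zeros_like(x) if span == 0 else np.clip(2 * (x - x.min()) / span - 1, -1, 1)
    phi = np.arccos(x)
    return np.cos(phi[:, None] + phi[None, :])

def recording_to_image(recording):
    """(time steps, sensors) -> (sensors, T, T): one GAF channel per sensor."""
    return np.stack([gasf(recording[:, s]) for s in range(recording.shape[1])])

def build_dataset(recordings, labels, window=64, stride=32):
    """Slice each recording into windows and encode each window as a multi-channel image."""
    images, targets = [], []
    for rec, label in zip(recordings, labels):
        for start in range(0, rec.shape[0] - window + 1, stride):
            images.append(recording_to_image(rec[start:start + window]))
            targets.append(label)
    return np.stack(images), np.array(targets)

# Random stand-ins for three 256-step recordings from an 8-sensor array.
rng = np.random.default_rng(0)
recordings = [rng.random((256, 8)) for _ in range(3)]
X, y = build_dataset(recordings, labels=[0, 1, 2])
```

Each 256-step recording yields seven overlapping windows here, so the resulting tensor can be fed to any image model that accepts 8-channel input.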

Model Architecture and Generation Strategy

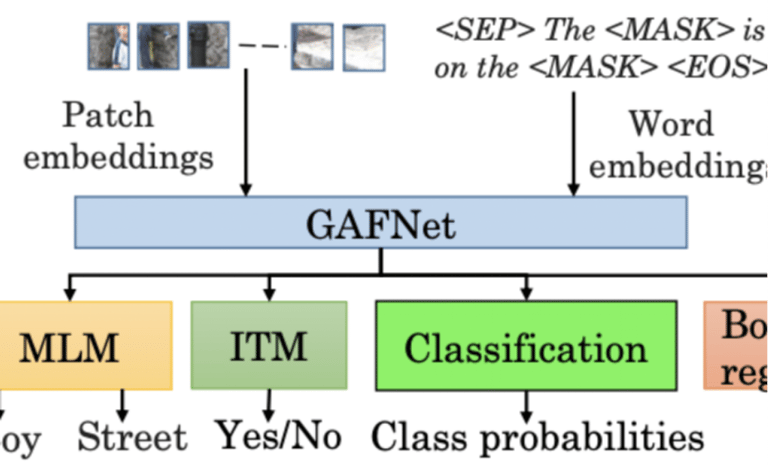

A Transformer extracts semantic embeddings from the GAF images.
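The study does not specify the Transformer architecture, so the following is only a sketch assuming a ViT-style encoder: the multi-channel GAF image is split into patches, each patch is linearly projected, a [CLS] token and position embeddings are added, and the [CLS] output after one self-attention block serves as the embedding. Weights are random and untrained, the pretraining approach of Susladkar et al. (2023) is not reproduced, and `patchify` and `TinyEncoder` are hypothetical names.

```python
import numpy as np

rng = np.random.default_rng(0)

def patchify(image, patch):
    """(C, H, W) -> (num_patches, C * patch * patch), non-overlapping patches."""
    c, h, w = image.shape
    x = image.reshape(c, h // patch, patch, w // patch, patch)
    return x.transpose(1, 3, 0, 2, 4).reshape(-1, c * patch * patch)

def layer_norm(x, eps=1e-5):
    mu = x.mean(-1, keepdims=True)
    return (x - mu) / np.sqrt(x.var(-1, keepdims=True) + eps)

def softmax(z):
    e = np.exp(z - z.max(-1, keepdims=True))
    return e / e.sum(-1, keepdims=True)

class TinyEncoder:
    """One pre-norm Transformer block, single-head attention, random weights."""
    def __init__(self, in_dim, dim, num_tokens):
        s = 1 / np.sqrt(dim)
        self.proj = rng.standard_normal((in_dim, dim)) / np.sqrt(in_dim)
        self.cls = rng.standard_normal((1, dim)) * 0.02
        self.pos = rng.standard_normal((num_tokens, dim)) * 0.02
        self.wq, self.wk, self.wv, self.wo = (rng.standard_normal((dim, dim)) * s for _ in range(4))
        self.w1 = rng.standard_normal((dim, 4 * dim)) * s
        self.w2 = rng.standard_normal((4 * dim, dim)) / np.sqrt(4 * dim)

    def __call__(self, tokens):
        x = np.vstack([self.cls, tokens @ self.proj]) + self.pos  # prepend [CLS], add positions
        h = layer_norm(x)
        q, k, v = h @ self.wq, h @ self.wk, h @ self.wv
        attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))            # (tokens, tokens)
        x = x + (attn @ v) @ self.wo                                # attention + residual
        x = x + np.maximum(layer_norm(x) @ self.w1, 0) @ self.w2   # ReLU MLP + residual
        return layer_norm(x)[0]                                     # [CLS] output = embedding

gaf_image = rng.uniform(-1, 1, size=(8, 64, 64))   # stand-in for an 8-channel GAF image
tokens = patchify(gaf_image, patch=16)             # (16, 2048)
encoder = TinyEncoder(in_dim=tokens.shape[1], dim=64, num_tokens=tokens.shape[0] + 1)
embedding = encoder(tokens)                        # (64,)
```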

Figure 2. Pretraining approach (Susladkar et al., 2023)

GAN or diffusion?

Guided by the embedding, the diffusion model generates diverse and stable images.
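A minimal sketch of embedding-guided diffusion sampling, assuming a DDPM-style linear noise schedule and classifier-free guidance as the conditioning mechanism (the source only says the model is guided by the embedding). To keep it runnable without a trained network, the noise predictor is replaced by the exact noise prediction for a toy data distribution concentrated at a single image; `target_image`, `exact_eps` and the all-zeros unconditional target are hypothetical stand-ins for what a trained model would learn.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)      # linear noise schedule (assumed)
alphas = 1.0 - betas
alpha_bar = np.cumprod(alphas)

def q_sample(x0, t, noise):
    """Forward process q(x_t | x_0). A network eps_theta(x_t, t, c) would be
    trained to recover `noise` from q_sample(x0, t, noise) by mean-squared error."""
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise

def target_image(cond, shape):
    """Hypothetical: the single clean image associated with an odour embedding."""
    return np.full(shape, np.tanh(cond.mean()))

def exact_eps(x_t, t, x0):
    """Exact noise prediction when all data sit at x0; stands in for eps_theta."""
    return (x_t - np.sqrt(alpha_bar[t]) * x0) / np.sqrt(1.0 - alpha_bar[t])

def sample(cond, shape=(3, 32, 32), guidance=1.0, seed=0):
    """DDPM ancestral sampling with classifier-free guidance (sigma_t^2 = beta_t)."""
    rng = np.random.default_rng(seed)
    x0_cond, x0_uncond = target_image(cond, shape), np.zeros(shape)
    x = rng.standard_normal(shape)                     # x_T ~ N(0, I)
    for t in reversed(range(T)):
        eps_c = exact_eps(x, t, x0_cond)               # conditional prediction
        eps_u = exact_eps(x, t, x0_uncond)             # unconditional prediction
        eps = eps_u + guidance * (eps_c - eps_u)       # guided noise estimate
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bar[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            x = x + np.sqrt(betas[t]) * rng.standard_normal(shape)
    return x

odour_embedding = np.array([0.5, -0.2, 0.9])            # hypothetical embedding
image = sample(odour_embedding)
```

With `guidance=1.0` the sampler lands on `target_image(cond)`; larger values extrapolate past it (here `guidance=2.0` yields twice the target), which illustrates how the guidance scale trades diversity for adherence to the condition.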

Diffusion was chosen over GANs for its higher generation quality and more stable training.

Part.03

Methodological details and innovations

Introducing Local Discriminative Subspace Projection (LDSP) to address sensor drift.
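LDSP itself is not reproduced here. As a simpler illustration of drift correction by subspace projection, the sketch below swaps in component correction: estimate the dominant drift direction from repeated measurements of a reference gas via PCA, then remove that direction from every sample. This baseline, its function names, and the synthetic drift data are assumptions for illustration only.

```python
import numpy as np

def drift_direction(reference):
    """Leading principal component of repeated reference-gas measurements
    (rows: measurements over time, columns: sensor features)."""
    centred = reference - reference.mean(axis=0)
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    return vt[0]                                        # unit-length direction

def remove_component(samples, direction):
    """Project samples onto the subspace orthogonal to `direction`."""
    d = direction / np.linalg.norm(direction)
    return samples - np.outer(samples @ d, d)

# Synthetic data for illustration only: 8 sensor features drifting along one direction.
rng = np.random.default_rng(0)
true_drift = rng.standard_normal(8)
time = np.linspace(0, 1, 50)[:, None]
reference = 1.0 + 3.0 * time * true_drift + 0.05 * rng.standard_normal((50, 8))
d = drift_direction(reference)
samples = rng.random((20, 8)) + 3.0 * rng.random((20, 1)) * true_drift
corrected = remove_component(samples, d)
```

Any odour information lying along the removed direction is lost as well, which is the main limitation of this simple baseline.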

Combining real and synthetic data for training to compensate for data scarcity.
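A hypothetical batching scheme for mixing real and synthetic samples: each batch holds a fixed share of synthetic items (`synth_fraction`, an assumed parameter), and an `is_synth` mask is returned so that a training loop could, for example, down-weight synthetic samples in the loss. How the synthetic data are produced is left open, as in the source.

```python
import numpy as np

def mixed_batches(real_x, real_y, synth_x, synth_y, batch_size=32, synth_fraction=0.5, seed=0):
    """Yield shuffled batches with a fixed share of synthetic samples (hypothetical scheme)."""
    rng = np.random.default_rng(seed)
    n_synth = int(round(batch_size * synth_fraction))
    n_real = batch_size - n_synth
    while True:
        # Sample without replacement unless a pool is smaller than its share.
        ri = rng.choice(len(real_x), size=n_real, replace=len(real_x) < n_real)
        si = rng.choice(len(synth_x), size=n_synth, replace=len(synth_x) < n_synth)
        x = np.concatenate([real_x[ri], synth_x[si]])
        y = np.concatenate([real_y[ri], synth_y[si]])
        is_synth = np.r_[np.zeros(n_real, bool), np.ones(n_synth, bool)]
        order = rng.permutation(batch_size)             # mix real and synthetic items
        yield x[order], y[order], is_synth[order]

# Random stand-ins: 40 real and 200 synthetic 8-channel 16x16 GAF images.
rng = np.random.default_rng(1)
real_x, real_y = rng.random((40, 8, 16, 16)), rng.integers(0, 3, 40)
synth_x, synth_y = rng.random((200, 8, 16, 16)), rng.integers(0, 3, 200)
batches = mixed_batches(real_x, real_y, synth_x, synth_y)
x, y, is_synth = next(batches)
```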

Expected Outcomes and Impacts

Building an image generation system with “digital olfaction”.

Advancing embodied AI by filling the olfactory perception gap.

Part.04

Rationale and Outlook

Theoretical support and reference systems

Conclusions and outlook

Theoretical support and reference systems

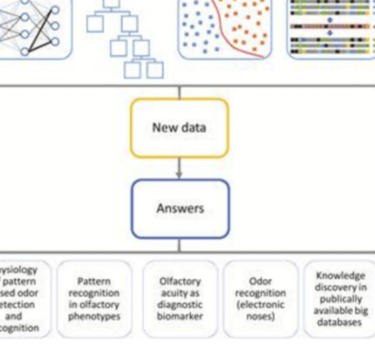

Drawing on five types of olfactory AI research pathways (Lötsch et al., 2019)

Using a biomimetic olfactory chip based on multi-sensor array technology as the hardware platform

Figure 3. Overview of approaches to data processing (Lötsch et al., 2019)

Conclusion and Outlook

This study extends the perceptual boundaries of AI, translating abstract olfactory signals into intuitive visual images.

It offers a new perspective for cross-modal AI research and could be extended to other multi-sensory interaction systems in the future.